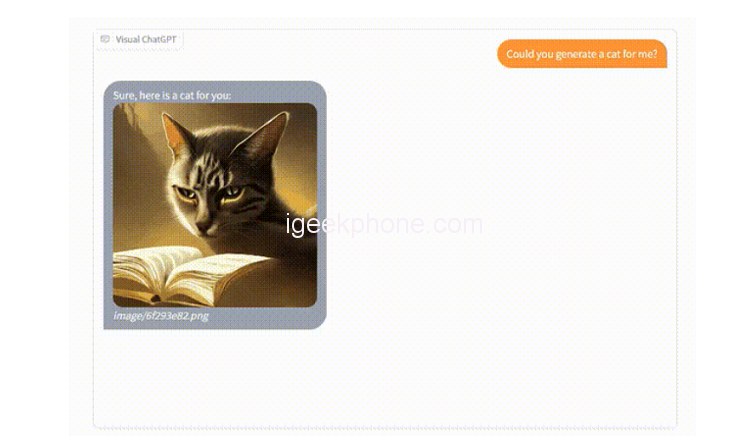

Visual ChatGPT connects ChatGPT with a series of Visual Foundation Models so that users can send and receive images while chatting. For example, ask it: "Can you generate a picture of a cat for me?" It immediately replies with both text and an image.

It can also edit the picture in response to a follow-up instruction, such as "replace the cat with a dog."

It can also understand images. For example, send it a picture and ask "What color is the motorcycle?", and it answers "black."

This is Visual ChatGPT, proposed by senior researchers at Microsoft Research Asia (MSRA). By connecting ChatGPT to multiple visual models through a Prompt Manager, they enabled ChatGPT to handle a variety of visual tasks.

The work drew attention as soon as it was released, and its GitHub repository has passed 1.5k stars. In short, it feels like GPT and DALL-E merged into one.

It can both understand text and draw pictures. As one commenter put it:

Isn’t this the ultimate meme maker?

The Trick Is in the Prompt?

In essence, Visual ChatGPT lets ChatGPT handle multimodal information. Because training a multimodal model from scratch takes a great deal of work, the researchers instead built on top of ChatGPT and connected it to existing visual models.

The key to making this work is an intermediary between ChatGPT and the visual models, so the researchers proposed the concept of a Prompt Manager.

It has three main functions:

First, it explicitly tells ChatGPT what each visual model does and specifies the model's input and output formats.

Second, it converts different kinds of visual information, such as PNG images, depth images, and mask matrices, into a language format that ChatGPT can understand.

Third, it manages the history of results produced by the visual models and the invocation priority of different models to avoid conflicts. This lets ChatGPT receive the visual models' outputs iteratively until the result satisfies the user.
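To make the three duties more concrete, here is a minimal Python sketch of what a prompt manager could look like. It is an illustration under stated assumptions, not the MSRA team's code: the class names `VisualTool` and `PromptManager`, the tool `ReplaceObject`, and the convention of representing an image to the language model by a unique file name are all hypothetical choices made for this example, and the "model" is a stub.

```python
import uuid
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class VisualTool:
    """Hypothetical descriptor for one visual foundation model."""
    name: str
    description: str    # plain-language role of the model
    input_format: str   # e.g. "image path" (assumed convention)
    output_format: str  # e.g. "image path" or "text"
    run: Callable[[str], str]


@dataclass
class PromptManager:
    """Illustrative sketch of the three Prompt Manager duties; not the authors' code."""
    tools: Dict[str, VisualTool] = field(default_factory=dict)
    images: Dict[str, bytes] = field(default_factory=dict)
    history: List[str] = field(default_factory=list)

    def register(self, tool: VisualTool) -> None:
        self.tools[tool.name] = tool

    # Duty 1: describe each tool's role and I/O format to the language model.
    def system_prompt(self) -> str:
        lines = ["You can use the following visual tools:"]
        for t in self.tools.values():
            lines.append(
                f"- {t.name}: {t.description} "
                f"Input: {t.input_format}. Output: {t.output_format}."
            )
        return "\n".join(lines)

    # Duty 2: turn raw visual data into something expressible in language.
    # The image bytes are kept under a unique file name, and that name is
    # the only thing the language model sees (an assumed convention).
    def image_to_text(self, image_bytes: bytes) -> str:
        filename = f"image/{uuid.uuid4().hex[:8]}.png"
        self.images[filename] = image_bytes
        self.history.append(f"User uploaded an image: {filename}")
        return filename

    # Duty 3: record each tool result so later turns can build on it.
    def call_tool(self, name: str, arg: str) -> str:
        result = self.tools[name].run(arg)
        self.history.append(f"{name}({arg}) -> {result}")
        return result


# Usage with a stub tool in place of a real visual model.
pm = PromptManager()
pm.register(VisualTool(
    name="ReplaceObject",  # hypothetical tool name
    description="Replaces an object in an image with another object.",
    input_format="image path, old object, new object",
    output_format="image path",
    run=lambda arg: "image/edited.png",  # stub: always returns the same path
))
path = pm.image_to_text(b"\x89PNG...")
pm.call_tool("ReplaceObject", f"{path}, cat, dog")
print(pm.system_prompt())
```

Using a file name as the textual stand-in for an image keeps everything the language model reads in plain text, while the actual pixels stay with the tools that need them.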

In this way, the workflow of Visual ChatGPT looks like this:

When the user uploads a picture, the model first passes it to the Prompt Manager, which converts it into language for ChatGPT to reason about. If ChatGPT determines that a question does not require a visual model, it answers directly (the first answer).

For the second question, ChatGPT needs a visual model to analyze the content, so it calls one. This repeats, possibly with further model calls, until ChatGPT judges that no more visual models are needed, and then it outputs the result.
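The decide-call-observe loop described above can be sketched as follows. This is a simplified illustration under assumptions, not the published implementation: the `(tool_name, tool_input)` / `(None, answer)` return protocol, the function `run_turn`, the tool name `VisualQuestionAnswering`, and the rule-based `fake_llm` that stands in for ChatGPT are all hypothetical. The `max_steps` cap is an added safeguard so the loop cannot run forever.

```python
from typing import Callable, Dict, Optional, Tuple

# Assumed protocol: the language model returns (tool_name, tool_input) to ask
# for a visual model, or (None, final_answer) when no tool is needed.
Decision = Tuple[Optional[str], str]


def run_turn(
    question: str,
    llm: Callable[[str], Decision],
    tools: Dict[str, Callable[[str], str]],
    max_steps: int = 5,
) -> str:
    """Call tools until the model says it is done, or give up after max_steps."""
    context = question
    for _ in range(max_steps):
        tool_name, payload = llm(context)
        if tool_name is None:
            return payload  # no (further) visual model needed: answer directly
        observation = tools[tool_name](payload)
        # Feed the tool's output back so the model can decide the next step.
        context += f"\nObservation from {tool_name}: {observation}"
    return "Stopped: step limit reached"


# Rule-based stand-in for ChatGPT, used only to make the sketch runnable.
def fake_llm(context: str) -> Decision:
    if "Observation" in context:
        return (None, context.rsplit(": ", 1)[-1])  # answer with last observation
    if "color" in context:
        return ("VisualQuestionAnswering", context)  # hypothetical tool name
    return (None, "Hello! How can I help?")


tools = {"VisualQuestionAnswering": lambda q: "black"}  # stub visual model
print(run_turn("What color is the motorcycle in image/demo.png?", fake_llm, tools))  # black
print(run_turn("Hi there", fake_llm, tools))  # Hello! How can I help?
```

The first call mirrors the motorcycle example (one tool call, then an answer); the second mirrors a question that needs no visual model and is answered directly.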

According to the paper, Visual ChatGPT incorporates 22 different visual models, including Stable Diffusion, BLIP, and pix2pix. To verify its capabilities, the researchers also ran a large number of zero-shot experiments.

As the examples at the beginning show, Visual ChatGPT understands images well and can keep generating and modifying pictures as the user requests.

The researchers also acknowledge some limitations. The quality of the generated results depends mainly on the performance of the visual models, and the heavy use of prompt engineering slows down generation to some extent.

The system may also call multiple models at once, which further hurts real-time performance. Finally, the privacy and security of input images need stronger protection.

MSRA Veterans Behind the Work

This research comes from a team at Microsoft Research Asia. The corresponding author is Duan Nan.

He is a principal researcher at MSRA and research manager of its Natural Language Computing Group, as well as an adjunct doctoral supervisor at the University of Science and Technology of China, an adjunct professor at Tianjin University, and a Distinguished Member of the China Computer Federation (CCF).

His research focuses on natural language processing, code intelligence, multimodal intelligence, and machine reasoning. He joined MSRA in 2006 and has worked there for more than 16 years.

The first author, Chenfei Wu, is also a senior researcher. According to his LinkedIn profile, he joined Microsoft in 2012 and has been there for 11 years; his current title is listed as software engineer.
