Recently, Google’s artificial intelligence model Gemini 1.5 evaluated a video generated by OpenAI’s Sora. It said that the video is visually appealing but contains several implausible details that reveal it is not real footage.

As previously reported by Igeekphone, the two companies recently announced their latest technologies: Google launched Gemini 1.5 Pro, and OpenAI launched the text-to-video tool Sora. The timing of Sora’s release has led to speculation that OpenAI is trying to draw public attention away from Google’s Gemini 1.5.

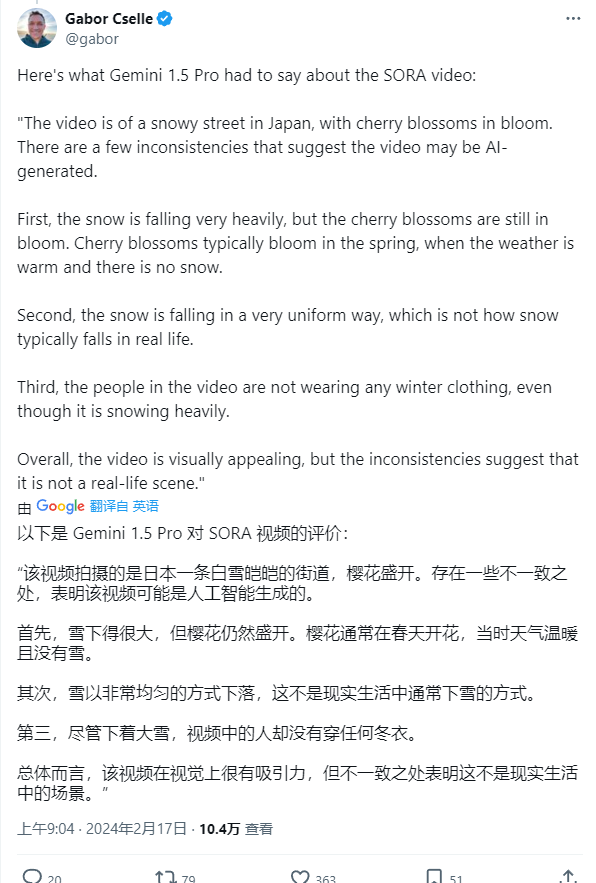

A Google executive shared a detailed analysis of Sora-generated videos on X. As an example, Gemini 1.5 Pro examined a Sora-generated video of a street in Japan showing both snow and cherry blossoms and pointed out that the video has obvious flaws.

According to Gemini 1.5 Pro’s analysis, the combination of heavy snow and cherry blossoms does not make sense, as cherry blossoms usually bloom in spring, when snow is uncommon. The snow also falls very uniformly, which is not how snow usually falls in real life. In addition, although the people in the video are walking through heavy snow, none of them are wearing winter clothes, which is also implausible.

“All in all, the video, while visually appealing, has inconsistencies that suggest it is not a real-life scene,” Gemini 1.5 concluded in its analysis.

Sora is a text-to-video tool capable of generating up to 60 seconds of video, with richly detailed scenes, complex camera movements, and multiple characters showing vivid emotions. Many have called it the “ChatGPT moment” of video generation.

Google’s Gemini 1.5 has a staggering 1 million token context window, far larger than GPT-4 Turbo’s 128,000 tokens and Anthropic Claude 2.1’s 200,000 tokens. This means it can process large amounts of information at once, including an hour of video, 11 hours of audio, a code base of more than 30,000 lines of code, or more than 700,000 words.
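
To put these context window figures in perspective, the short Python sketch below computes how many times larger Gemini 1.5's window is than the other two. The token counts are the ones quoted in this article; the dictionary and function names are only illustrative and do not come from any vendor's API.

```python
# Context window sizes in tokens, as quoted in the article.
CONTEXT_WINDOWS = {
    "Gemini 1.5": 1_000_000,
    "GPT-4 Turbo": 128_000,
    "Claude 2.1": 200_000,
}


def ratio_to(baseline: str, other: str) -> float:
    """Return how many times larger the baseline's window is than the other's."""
    return CONTEXT_WINDOWS[baseline] / CONTEXT_WINDOWS[other]


if __name__ == "__main__":
    for name in ("GPT-4 Turbo", "Claude 2.1"):
        factor = ratio_to("Gemini 1.5", name)
        print(f"Gemini 1.5 holds about {factor:.1f}x the context of {name}")
```

By these figures, Gemini 1.5's window is roughly 7.8 times the size of GPT-4 Turbo's and 5 times the size of Claude 2.1's.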