OpenAI has released a substantial set of updates to the ChatGPT API, the most significant being a new function calling capability. This feature lets developers connect external tools to the model through the API, similar to the plugin functionality in the web version. Many regard function calling as the most important update since the ChatGPT API launched.

OpenAI’s changes to the ChatGPT API extend beyond function calling. The update both expands capacity and lowers prices. It includes the new models gpt-4-0613 and gpt-3.5-turbo-0613. A new variant of gpt-3.5-turbo quadruples the context length from 4k to 16k tokens. The price of gpt-3.5-turbo input tokens has been reduced by 25%. The most advanced embedding model is now 75% cheaper. In addition, access to the GPT-4 API is being opened up at scale until the waitlist is cleared.

The updates triggered considerable discussion among netizens in China, with some seeing them as a substantial challenge to domestic large language models. OpenAI highlights that the model decides on its own when to use external tools. Both new versions of GPT-4 and GPT-3.5 support function calls, and developers only need to describe the functions available to the model. The model then determines when to call each function based on the prompt, similar to how ChatGPT calls plugins.
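As a rough illustration of this flow, the sketch below describes one function as a JSON Schema and then handles a function call locally. No network request is made: `simulated_reply` is a hand-written stand-in for an assistant message, and `get_current_weather`, its canned return value, and the `dispatch` helper are hypothetical names chosen for this example. The key point is that the model only returns a function name and JSON-encoded arguments; the developer's own code performs the call.

```python
import json
from typing import Optional

# Hypothetical local tool; a real one would query a weather service.
def get_current_weather(location: str, unit: str = "celsius") -> dict:
    return {"location": location, "temperature": 22, "unit": unit}

# Function description that would be sent alongside the chat messages.
# The "parameters" field is a JSON Schema object.
FUNCTIONS = [
    {
        "name": "get_current_weather",
        "description": "Get the current weather for a city",
        "parameters": {
            "type": "object",
            "properties": {
                "location": {"type": "string", "description": "City name"},
                "unit": {"type": "string", "enum": ["celsius", "fahrenheit"]},
            },
            "required": ["location"],
        },
    }
]

# Map of function names the model may request to local callables.
AVAILABLE = {"get_current_weather": get_current_weather}

def dispatch(message: dict) -> Optional[dict]:
    """Run the function the model asked for and build the follow-up message.

    Returns None when the assistant message contains no function call.
    """
    call = message.get("function_call")
    if call is None:
        return None
    func = AVAILABLE[call["name"]]
    args = json.loads(call["arguments"])  # arguments arrive as a JSON string
    result = func(**args)
    return {"role": "function", "name": call["name"], "content": json.dumps(result)}

# Simulated assistant message (an assumption standing in for a real API reply).
simulated_reply = {
    "role": "assistant",
    "content": None,
    "function_call": {
        "name": "get_current_weather",
        "arguments": '{"location": "Boston"}',
    },
}

followup = dispatch(simulated_reply)
print(followup)
```

In a real application, the `followup` message would be appended to the conversation and sent back so the model can phrase a final answer from the function result.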

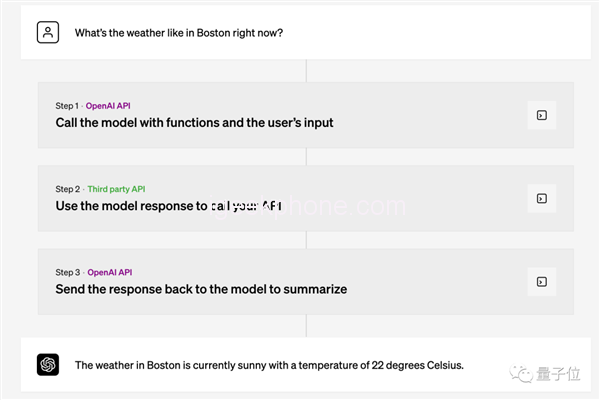

To illustrate the usage of function calling, OpenAI provided three examples. Firstly, a chatbot can call an external API to perform actions or answer questions, such as sending an email or providing weather updates. Secondly, natural language can be converted into API calls or database queries, automatically generating SQL query statements, like retrieving the number of orders from the previous month. Thirdly, structured data can be extracted from text by defining required parameters, such as a person’s name, birthday, and location, and extracting relevant information from a given web page link, such as a Wikipedia article.
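The second use case, turning natural language into a database query, might look roughly like the sketch below. Everything here is illustrative: the `run_sql` function, the `orders` table, its sample rows, and the `simulated_arguments` string are assumptions made for this example, and the model's output is hand-written rather than fetched from the API. Because the query text comes from a model, the sketch only accepts statements that start with `SELECT`.

```python
import json
import sqlite3

# Hypothetical function description offered to the model.
QUERY_FUNCTION = {
    "name": "run_sql",
    "description": "Run a read-only SQL query against the orders database",
    "parameters": {
        "type": "object",
        "properties": {
            "query": {"type": "string", "description": "A single SELECT statement"}
        },
        "required": ["query"],
    },
}

def run_sql(conn: sqlite3.Connection, query: str) -> list:
    """Execute a model-generated query, rejecting anything but SELECT."""
    if not query.lstrip().lower().startswith("select"):
        raise ValueError("only SELECT statements are allowed")
    return conn.execute(query).fetchall()

# Small in-memory database with made-up sample data.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, placed_on TEXT)")
conn.executemany(
    "INSERT INTO orders (placed_on) VALUES (?)",
    [("2023-05-03",), ("2023-05-20",), ("2023-06-01",)],
)

# Stand-in for the JSON arguments a model might return when asked
# "How many orders were placed in May 2023?"
simulated_arguments = json.dumps(
    {
        "query": "SELECT COUNT(*) FROM orders "
                 "WHERE placed_on BETWEEN '2023-05-01' AND '2023-05-31'"
    }
)

rows = run_sql(conn, json.loads(simulated_arguments)["query"])
print(rows)  # [(2,)]
```

A prefix check like this is only a minimal guard; a production setup would more likely rely on a read-only database user.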

This new feature has been widely praised by netizens, particularly developers, who expect it to improve their productivity significantly. Previously, developers had to rely on LangChain’s tools to enable function calls for GPT. Although LangChain was in theory more efficient to operate, its reliability fell short of the new GPT models, which have been tuned specifically for this purpose.

As new model versions roll out, older ones are being phased out. Applications using the initial versions of gpt-3.5-turbo and gpt-4 will be automatically upgraded to the new versions on June 27. Developers who need more time for the transition can explicitly specify the old versions in their requests; however, all requests to the old versions will fail after September 13.

In addition to the feature updates, OpenAI has cut prices. The price of input tokens for the widely used gpt-3.5-turbo (4k token version) has been reduced by 25%. Input tokens now cost $0.0015 per thousand tokens, or roughly 667,000 tokens for $1. Output tokens are priced at $0.002 per thousand tokens, or 500,000 tokens for $1. In terms of English text, this translates to approximately 700 pages for $1. The price of the embeddings model has dropped by 75%. It now costs $0.0001 per thousand tokens, equivalent to 10 million tokens for $1. Additionally, the newly introduced 16k token version of gpt-3.5-turbo offers four times the context length of the 4k version at only double the price: $0.003 per thousand input tokens and $0.004 per thousand output tokens. Some netizens reported a drastic decrease in their monthly bill, from $100 to a few cents, although the specific circumstances remain unclear.
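The per-dollar figures follow directly from the per-thousand-token prices. The short sketch below reproduces that arithmetic using only the prices quoted in this article; the dictionary keys and the helper names `tokens_per_dollar` and `chat_cost` are illustrative choices for this example, not part of any official SDK.

```python
# Prices in US dollars per 1,000 tokens, as quoted in this article.
PRICES_PER_1K = {
    "gpt-3.5-turbo": {"input": 0.0015, "output": 0.002},
    "gpt-3.5-turbo-16k": {"input": 0.003, "output": 0.004},
}
EMBEDDING_PRICE_PER_1K = 0.0001

def tokens_per_dollar(price_per_1k: float) -> int:
    """Number of tokens one dollar buys at the given per-1k price."""
    return round(1000 / price_per_1k)

def chat_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    """Estimated cost in dollars of one chat request."""
    price = PRICES_PER_1K[model]
    return (input_tokens / 1000) * price["input"] + (output_tokens / 1000) * price["output"]

print(tokens_per_dollar(PRICES_PER_1K["gpt-3.5-turbo"]["input"]))   # 666667
print(tokens_per_dollar(PRICES_PER_1K["gpt-3.5-turbo"]["output"]))  # 500000
print(tokens_per_dollar(EMBEDDING_PRICE_PER_1K))                    # 10000000

# A request with 3,000 input tokens and 1,000 output tokens on each variant.
for model in PRICES_PER_1K:
    print(model, round(chat_cost(model, 3000, 1000), 6))
```

For the same token counts, the 16k variant costs exactly twice as much as the 4k variant, which matches the "double the price" description above.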

While OpenAI’s updates have drawn significant attention, they have also invited comparisons to LangChain, an open-source development framework for large models. Netizens have noticed similarities between OpenAI’s new “function call” feature and LangChain’s “Tools.” Speculation has emerged that OpenAI might replicate additional LangChain features, such as Chains and Indexes. Notably, LangChain recently secured $10 million in seed funding, which gives it resources to keep competing amid these developments.

Within minutes of OpenAI’s official update, LangChain swiftly announced its commitment to compatibility and released a new version that not only supports the official functions but also converts developer-written tools into OpenAI functions. The speed at which LangChain responded to these developments was commendable, leaving netizens in awe of its agility. Amidst the rapid pace of development, questions naturally arise regarding OpenAI’s stance toward entrepreneurial projects. OpenAI CEO Sam Altman recently addressed this concern, stating that they aim to avoid competing with customers, except for ChatGPT-type consumer-grade applications. However, it appears that development tools fall outside the scope of this non-competitive approach.

Another key player is Microsoft, the largest funder of OpenAI, which provides ChatGPT API services through the Azure cloud. Developers who switched from the official OpenAI API to Microsoft Azure have reported significant performance improvements. Median latency fell from 15 seconds to 3 seconds, and 95th-percentile latency fell from 60 seconds to 15 seconds. The average number of tokens processed per second tripled from 8 to 24. Furthermore, with certain discounts offered by Azure, the service is even more cost-effective than before. However, Azure tends to receive updates more slowly than OpenAI’s own API.

As a result, developers have devised a strategy of leveraging OpenAI for rapid iteration during the development phase and transitioning to Microsoft Azure for large-scale deployment. This approach allows them to benefit from OpenAI’s agile updates while capitalizing on the improved performance and cost-effectiveness of Azure.

In conclusion, OpenAI’s recent updates to the ChatGPT API have brought forth transformative changes, with the introduction of function calling being the standout feature. These updates encompass not only expanded capacity but also reduced pricing, catering to developers’ needs and improving work efficiency. While comparisons to LangChain have arisen, OpenAI’s commitment to avoiding competition with customers, except in consumer-grade applications, remains evident. With Microsoft’s involvement as a significant funder of OpenAI, Azure cloud services have provided developers with improved performance and cost-effectiveness. As the landscape continues to evolve, developers are finding strategies to capitalize on the strengths of both OpenAI and Microsoft Azure for optimal results in their projects.
